Led by Prof. Zhiqiang Zheng, the NuBot team was founded in 2004. We currently have two full professors (Prof. Zhiqiang Zheng and Prof. Hui Zhang), one associate professor (Prof. Huimin Lu), one assistant professor (Dr. Junhao Xiao), and several graduate students. To date, 8 team members have obtained doctoral degrees through research on the RoboCup Middle Size League (MSL), and more than 20 have obtained master's degrees. For details on each member, please see NuBoters.

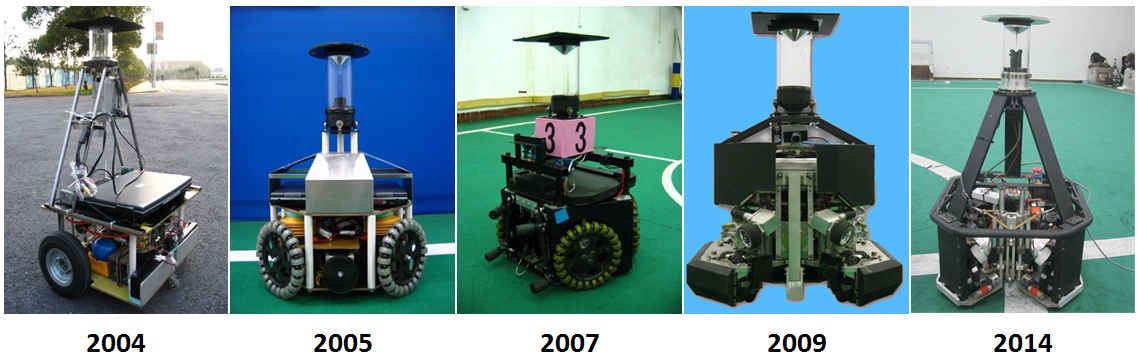

As shown in the figure, five generations of robots have been built since 2004. We initially participated in the RoboCup Simulation League and Small Size League (SSL). Since 2006, we have actively participated in RoboCup MSL, competing in Bremen, Germany (2006); Atlanta, USA (2007); Suzhou, China (2008); Graz, Austria (2009); Singapore (2010); Eindhoven, Netherlands (2013); João Pessoa, Brazil (2014); Hefei, China (2015); Leipzig, Germany (2016); Nagoya, Japan (2017); Montréal, Canada (2018); and Sydney, Australia (2019). We have also participated in the RoboCup China Open since it was launched in 2006.

The NuBot robots are used not only for RoboCup but also as a test bed for research beyond robot soccer. As a result, we have published more than 70 journal and conference papers; for details, please see the publication list. Our current research focuses mainly on multi-robot coordination, robust robot vision, and formation control.

The following items are our team description papers (TDPs), which document our research progress over the past years.

This video accompanies the paper: Xiao Li, Bingxin Han, Zhiwen Zeng, Junhao Xiao, Huimin Lu. Human-Robot Interaction Based on Battle Management Language for Multi-robot System.

Abstract: Commanding and controlling a multi-robot system is a challenging task. Static control commands can hardly meet the full range of requirements for controlling different robots, and as the number of robots increases, motion-level commands alone can hardly satisfy the demands of commanding a multi-robot system. This paper uses a limited natural language to control multi-robot systems and proposes a framework based on Battle Management Language (BML) to command them. Within this framework, the capabilities and names of robots can be dynamically added to a dictionary, and the limited natural language is converted into standard BML commands according to that dictionary to control the multi-robot system. In this way, the robots can execute motion-level commands, such as movement and steering, as well as task-level commands, such as enclosing and defense. The experimental results show that a system composed of different types of robots can be commanded using the proposed interactive framework.
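The dictionary-driven conversion described in the abstract can be illustrated with a minimal Python sketch. It assumes a hypothetical limited grammar of the form `<robot> <action> [x y]` and emits a simplified BML-like who/what/where record as a plain dict. The class `CommandDictionary`, its methods, and the output fields are illustrative assumptions, not the paper's implementation or the actual BML schema.

```python
class CommandDictionary:
    """Hypothetical dictionary of robot names and capabilities that can grow at run time."""

    def __init__(self) -> None:
        # Maps a lowercase robot name to the set of actions it supports.
        self.capabilities: dict[str, set[str]] = {}

    def register(self, robot: str, actions: list[str]) -> None:
        # Add a new robot, or extend an existing robot's capabilities.
        self.capabilities.setdefault(robot.lower(), set()).update(a.lower() for a in actions)

    def translate(self, sentence: str) -> dict:
        """Convert '<robot> <action> [args...]' into a simplified BML-like record."""
        tokens = sentence.lower().split()
        if len(tokens) < 2:
            raise ValueError("expected '<robot> <action> [args...]'")
        who, what, *args = tokens
        if who not in self.capabilities:
            raise ValueError(f"unknown robot: {who}")
        if what not in self.capabilities[who]:
            raise ValueError(f"{who} has no capability '{what}'")
        # Remaining tokens are treated as numeric arguments (e.g. a target position).
        return {"who": who, "what": what, "where": [float(a) for a in args]}
```

For example, after `d.register("robot1", ["move", "turn"])`, the sentence `"Robot1 move 3 4"` becomes `{"who": "robot1", "what": "move", "where": [3.0, 4.0]}`, while a command the robot has not registered is rejected. Registering a new robot only extends the dictionary, which mirrors the idea of adding names and capabilities dynamically.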

The team description paper, whose main contribution is a newly designed three-wheel robot, can be downloaded from here.

[1] Wei Dai, Huimin Lu, Junhao Xiao and Zhiqiang Zheng. Task Allocation without Communication Based on Incomplete Information Game Theory for Multi-robot Systems. Journal of Intelligent & Robotic Systems, 2018. [PDF]

4th place in the MSL scientific challenge at RoboCup 2018, Montréal, Canada

3rd place in the MSL technical challenge at RoboCup 2018, Montréal, Canada

4th place in the MSL at RoboCup 2018, Montréal, Canada

2nd place in the MSL at RoboCup 2018 China Open, Shaoxing, China

2nd place in the MSL technical challenge at RoboCup 2018 China Open, Shaoxing, China

3rd place in the MSL scientific challenge at RoboCup 2017, Nagoya, Japan

3rd place in the MSL technical challenge at RoboCup 2017, Nagoya, Japan

4th place in the MSL at RoboCup 2017, Nagoya, Japan

3rd place in the MSL at RoboCup 2017 China Open, Rizhao, China

1st place in the MSL scientific challenge at RoboCup 2017 China Open, Rizhao, China

3rd place in the MSL scientific challenge at RoboCup 2016, Leipzig, Germany

4th place in the MSL at RoboCup 2016, Leipzig, Germany

3rd place in the MSL at RoboCup 2016 China Open, Hefei, China

1st place in the MSL scientific challenge at RoboCup 2016 China Open, Hefei, China

4. Qualification video

The qualification video for RoboCup 2019 Sydney, Australia can be found on our Youku channel (recommended for users in China) or our YouTube channel (recommended for users outside China).

5. Mechanical and Electrical Description and Software Flow Chart

The NuBot team's Mechanical and Electrical Description, together with a Software Flow Chart, can be downloaded from here.

Zhiqian Zhou, a member of the NuBot team, served the MSL community as a member of the Organizing Committee (OC) of RoboCup 2019 Sydney, Australia.

7. Declaration regarding mixed team

No!

8. Declaration regarding 802.11b AP

No!

9. MAC address

The list of our team's MAC addresses can be downloaded from here.

This video shows the experimental results of the following paper: Yi Li, Chenggang Xie, Huimin Lu, Xieyuanli Chen, Junhao Xiao and Hui Zhang. Scale-aware Monocular SLAM Based on Convolutional Neural Network. Proceedings of the 15th IEEE International Conference on Information and Automation 2018 (ICIA 2018), Mount Wuyi, 2018.

Abstract: State-of-the-art monocular Simultaneous Localization and Mapping (SLAM) algorithms have achieved remarkable performance. However, because of the scale ambiguity inherent in monocular vision, existing monocular SLAM systems cannot directly recover the absolute scale in unknown environments. Given the impressive results of Convolutional Neural Networks (CNNs) in depth estimation, we propose a CNN-based monocular SLAM that combines CNN-predicted depth maps with monocular ORB-SLAM, overcoming the scale ambiguity of monocular SLAM. We test our method on the popular KITTI odometry benchmark, and the experimental results show that the average translational and rotational errors reach 2.00% and 0.0051°/m, respectively. In addition, our approach works well under pure rotational motion, which demonstrates the robustness and high accuracy of the proposed algorithm.
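One simple way to turn CNN depth into an absolute scale, shown here as an illustrative sketch rather than the paper's exact method, is to take the median ratio between CNN-predicted depths and the up-to-scale SLAM depths of the same tracked points. The median makes the estimate robust to a few bad depth predictions. The function name `estimate_scale` and the 0.1 to 80 m validity range (a typical KITTI depth cap) are assumptions.

```python
from statistics import median


def estimate_scale(slam_depths, cnn_depths, min_depth=0.1, max_depth=80.0):
    """Median ratio of CNN depth to SLAM (up-to-scale) depth over matched points.

    Pairs with a non-positive SLAM depth or a CNN depth outside the assumed
    valid range are ignored.
    """
    ratios = [
        c / s
        for s, c in zip(slam_depths, cnn_depths)
        if s > 0 and min_depth < c < max_depth
    ]
    if not ratios:
        raise ValueError("no valid depth pairs")
    return median(ratios)
```

Multiplying the SLAM translations (and map points) by the returned factor expresses them in metric units; in a running system the ratio would typically be re-estimated or smoothed over keyframes.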

Abstract: Most robots in urban search and rescue (USAR) perform tasks under teleoperation by human operators. The operator has to know the location of the robot and find the position of the target (victim). This paper presents an augmented reality (AR) system using a Kinect sensor on a custom-designed rescue robot. First, Simultaneous Localization and Mapping (SLAM) with an RGB-D camera is run to obtain the position and orientation of the robot. Second, a deep learning method is adopted to obtain the location of the target. Finally, we place an AR marker of the target in the global coordinate frame and display it on the operator's screen, so the target is indicated even when it is outside the camera's field of view. The experimental results show that the proposed system can help humans interact with robots.
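Anchoring the marker in the global frame means it has to be reprojected into the current camera view every frame. A minimal pinhole-projection sketch, assuming the SLAM pose is given as a camera-to-world rotation `R_wc` (row-major 3x3) and translation `t_wc`, and using commonly quoted default Kinect intrinsics for a 640x480 image (assumed values, not a calibration); `project_target` is a hypothetical helper name:

```python
def project_target(p_world, R_wc, t_wc, fx=525.0, fy=525.0, cx=319.5, cy=239.5,
                   width=640, height=480):
    """Project a world-frame point into the image; returns (u, v, visible)."""
    # Transform into the camera frame: p_c = R_wc^T (p_w - t_wc).
    d = [p_world[i] - t_wc[i] for i in range(3)]
    x, y, z = (sum(R_wc[r][c] * d[r] for r in range(3)) for c in range(3))
    if z <= 0:
        # The target is behind the camera, so it cannot appear in the image.
        return None, None, False
    # Pinhole projection with the assumed intrinsics.
    u = fx * x / z + cx
    v = fy * y / z + cy
    visible = 0 <= u < width and 0 <= v < height
    return u, v, visible
```

When `visible` is `False`, the operator display could draw an off-screen indicator pointing toward the target instead; that fallback is a hypothetical design choice, not something described in the abstract.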

This video accompanies the paper: Junchong Ma, Weijia Yao, Wei Dai, Huimin Lu, Junhao Xiao, Zhiqiang Zheng. Cooperative Encirclement Control for a Group of Targets by Decentralized Robots with Collision Avoidance. Proceedings of the 37th Chinese Control Conference, 2018.

Abstract: This study focuses on the multi-target capture and encirclement control problem for multiple mobile robots. Under a distributed architecture, the problem requires a group of robots to encircle several moving targets in coordinated circle formations. To allocate the targets to robots efficiently, a Hybrid Dynamic Task Allocation (HDTA) algorithm is proposed, in which a temporary "manager" robot is assigned to negotiate with the other robots. For the encirclement formation, a robust control law is introduced that lets any number of mobile robots form a circle with arbitrary inter-robot angular spacing. For safety, an online collision avoidance algorithm combining sub-targets with Artificial Potential Fields (APF) is proposed, which ensures that the robots' paths are collision-free. To demonstrate the validity and robustness of the proposed scheme, both theoretical analysis and simulation experiments were conducted.
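The ingredients named in the abstract (circle slots with arbitrary angular spacing and APF-based collision avoidance) can be illustrated with a generic sketch: desired positions on a circle of radius r around a target at given angles, a proportional pull toward the assigned slot, and the classic repulsive potential-field term from robots closer than an influence distance. This is not the paper's control law or the HDTA algorithm; the function names and the gains `k_att`, `k_rep`, `d0`, `v_max` are assumptions.

```python
import math


def circle_slots(center, radius, angles):
    """Desired positions on a circle around a target at the given angles (radians)."""
    cx, cy = center
    return [(cx + radius * math.cos(a), cy + radius * math.sin(a)) for a in angles]


def velocity_command(p, slot, others, k_att=1.0, k_rep=0.5, d0=1.0, v_max=2.0):
    """Planar velocity: attraction to the slot plus APF repulsion from nearby robots."""
    # Attractive term pulls the robot toward its assigned slot.
    vx = k_att * (slot[0] - p[0])
    vy = k_att * (slot[1] - p[1])
    # Classic repulsive term, active only for robots closer than d0.
    for q in others:
        dx, dy = p[0] - q[0], p[1] - q[1]
        d = math.hypot(dx, dy)
        if 0 < d < d0:
            gain = k_rep * (1.0 / d - 1.0 / d0) / d**2
            vx += gain * dx / d
            vy += gain * dy / d
    # Saturate the commanded speed at v_max.
    speed = math.hypot(vx, vy)
    if speed > v_max:
        vx, vy = vx * v_max / speed, vy * v_max / speed
    return vx, vy
```

Because the slot angles are an explicit input, unequal angular spacing is just a different `angles` list; recomputing the slots each cycle from the target's current position lets the formation follow a moving target. Pure potential-field schemes can stall in local minima, which is one reason an approach like the paper's combines them with sub-targets.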


2nd place in the MSL technical challenge at RoboCup 2015, Hefei, China

3rd place in the MSL scientific challenge at RoboCup 2015, Hefei, China

6th place in the MSL at RoboCup 2015, Hefei, China

4. Qualification video

The qualification video for RoboCup 2018 Montréal, Canada can be found on our Youku channel (recommended for users in China) or our YouTube channel (recommended for users outside China).


This video accompanies the paper: Yi Liu, Yuhua Zhong, Xieyuanli Chen, Pan Wan, Huimin Lu, Junhao Xiao, Hui Zhang. The Design of a Fully Autonomous Robot System for Urban Search and Rescue. Proceedings of the 2016 IEEE International Conference on Information and Automation, 2016.

Abstract: Autonomous robots in urban search and rescue (USAR) have to fulfill several tasks at the same time: localization, mapping, exploration, object recognition, etc. This paper describes the complete system and the underlying research of the NuBot rescue robot developed for the RoboCup Rescue competition, with an emphasis on autonomous exploration of the rescue environment. A novel path-following strategy and a multi-sensor-based controller are designed to drive the robot across unstructured terrain. The robot system has been successfully applied and tested in the RoboCup Rescue Robot League (RRL) competition and won the championship of the 2016 RoboCup China Open RRL competition.

This video accompanies the following paper: Huimin Lu, Junhao Xiao, Lilian Zhang, Shaowu Yang, Andreas Zell. Biologically Inspired Visual Odometry Based on the Computational Model of Grid Cells for Mobile Robots. Proceedings of the 2016 IEEE Conference on Robotics and Biomimetics, 2016.

Abstract: Visual odometry is a core component of many visual navigation systems, such as visual simultaneous localization and mapping (SLAM). Grid cells have been found to be part of the path integration system in the rat's entorhinal cortex, and they provide inputs to place cells in the rat's hippocampus. Together with other cells, they constitute a positioning system in the brain. Computational models of grid cells based on continuous attractor networks have also been proposed in the computational biology community, and with these models, self-motion information can be integrated to realize dead reckoning. However, few researchers in the robotics community have so far tried to use these computational models of grid cells directly in robot visual navigation. In this paper, we propose applying the continuous attractor network model of grid cells to integrate the robot's motion information estimated by the vision system, thereby realizing a biologically inspired visual odometry. The experimental results show that our algorithm achieves good dead reckoning for different mobile robots with very different motion velocities. We also implement a full visual SLAM system by simply combining the proposed visual odometry with a straightforward loop closure detection derived from the well-known RatSLAM, and it achieves results comparable to those of RatSLAM.

Real-time Terrain Classification for Rescue Robot Based on Extreme Learning Machine

Yuhua Zhong, Junhao Xiao, Huimin Lu and Hui Zhang

Fully autonomous robots in urban search and rescue (USAR) have to deal with complex terrain. Recognizing the terrain ahead in real time can effectively improve a rescue robot's ability to traverse it. This paper presents a real-time terrain classification system using a 3D LIDAR on a custom-designed rescue robot. First, LIDAR state estimation and point cloud registration run in parallel to extract the test lane region. Second, Normal Aligned Radial Features (NARF) are extracted and downscaled using a distance-based weighting method. Finally, an extreme learning machine (ELM) classifier is designed to recognize the terrain types. Experimental results demonstrate the effectiveness of the proposed system.
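As a rough illustration of the classification stage, a basic extreme learning machine draws its hidden-layer weights at random, keeps them fixed, and solves only the output weights by least squares using the Moore-Penrose pseudo-inverse, which is why training is fast enough for real-time use. The sketch assumes the per-region feature vectors (for instance, downscaled NARF descriptors) are already computed; the class name `ELM`, the sigmoid activation, and the hidden-layer size are illustrative choices, not the paper's configuration.

```python
import numpy as np


class ELM:
    """Basic extreme learning machine: fixed random hidden layer, least-squares output."""

    def __init__(self, n_hidden=100, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # Sigmoid activations of the fixed random projection.
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.b)))

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        self.classes_ = np.unique(y)
        # One-hot encode the terrain labels as regression targets.
        T = (np.asarray(y)[:, None] == self.classes_[None, :]).astype(float)
        # Hidden weights and biases are random and never trained.
        self.W = self.rng.standard_normal((X.shape[1], self.n_hidden))
        self.b = self.rng.standard_normal(self.n_hidden)
        H = self._hidden(X)
        # Output weights: minimum-norm least-squares solution via the pseudo-inverse.
        self.beta = np.linalg.pinv(H) @ T
        return self

    def predict(self, X):
        scores = self._hidden(np.asarray(X, dtype=float)) @ self.beta
        return self.classes_[np.argmax(scores, axis=1)]
```

Usage follows the familiar fit/predict pattern, e.g. `ELM(n_hidden=20).fit(features, labels).predict(new_features)`, where the labels could be terrain names such as `"flat"` or `"stairs"` (hypothetical classes).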

Video

If the link below does not work, the video can be found here.