Our NuBot team, led by Prof. Zhiqiang Zheng, was founded in 2004. Currently we have two full professors (Prof. Zhiqiang Zheng and Prof. Hui Zhang), one associate professor (Prof. Huimin Lu), one assistant professor (Dr. Junhao Xiao), and several graduate students. To date, 8 team members have earned their doctoral degrees with research on the RoboCup Middle Size League (MSL), and more than 20 have earned their master's degrees. For more details on each member, please see NuBoters.

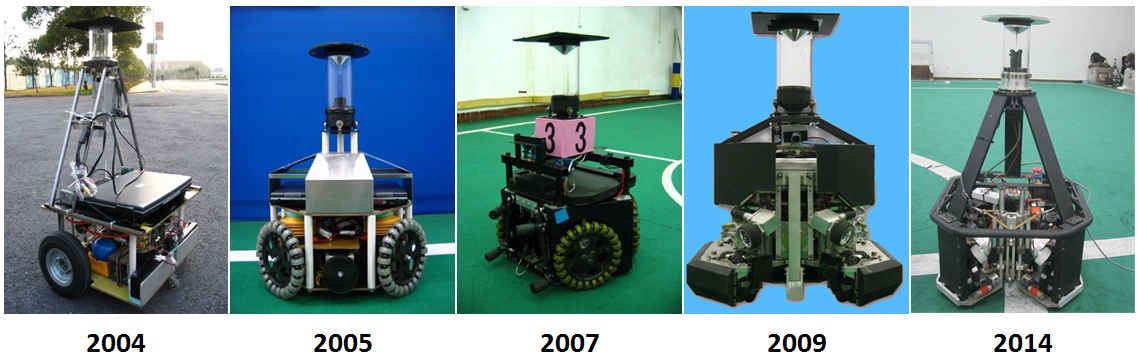

As shown in the figure below, we have built five generations of robots since 2004. We initially participated in the RoboCup Simulation League and the Small Size League (SSL). Since 2006, we have actively participated in RoboCup MSL, competing in Bremen, Germany (2006); Atlanta, USA (2007); Suzhou, China (2008); Graz, Austria (2009); Singapore (2010); Eindhoven, Netherlands (2013); João Pessoa, Brazil (2014); Hefei, China (2015); Leipzig, Germany (2016); Nagoya, Japan (2017); Montréal, Canada (2018); and Sydney, Australia (2019). We have also participated in the RoboCup China Open since it was launched in 2006.

Beyond RoboCup, the NuBot robots also serve as an ideal test bed for research that goes beyond robot soccer. As a result, we have published more than 70 journal and conference papers. For more details, please see the publication list. Our current research focuses mainly on multi-robot coordination, robust robot vision, and formation control.

The following items are our team description papers (TDPs), which illustrate our research progress over the past years.

This video accompanies the paper: Yuxi Huang, Ming Lv, Dan Xiong, Shaowu Yang, Huimin Lu. An Object Following Method Based on Computational Geometry and PTAM for UAV in Unknown Environments. Proceedings of the 2016 IEEE International Conference on Information and Automation, 2016.

Abstract: This paper introduces an object following method based on computational geometry and PTAM for Unmanned Aerial Vehicles (UAVs) in unknown environments. Since the object can easily move out of the camera's field of view (FOV), and it is difficult to bring it back into view by relative attitude control alone, we propose a novel solution that re-finds the object based on the visual simultaneous localization and mapping (SLAM) results from PTAM. The object is a pad marked with a letter H surrounded by a circle. Using computational geometry, we obtain the 3D position of the circle's center in the camera coordinate system. When the object moves out of the camera's FOV, a Kalman filter is used to predict the object's velocity, so that the pad can be searched for effectively. Experiments demonstrate that the ambiguity in the pad's localization has little impact on object following. The experimental results also validate the effectiveness and efficiency of the proposed method.
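The abstract mentions a Kalman filter that predicts the object's velocity once the pad leaves the FOV. The sketch below illustrates that idea with a textbook constant-velocity Kalman filter for a planar target; it is an assumption-laden example, not the filter used in the paper. The state layout `[x, y, vx, vy]`, the noise levels `q` and `r`, and the helper names (`make_cv_model`, `run_demo`) are all hypothetical. While the pad is visible, each step runs `predict` followed by `update`; once it is lost, only `predict` runs, and the predicted position indicates where to search.

```python
import numpy as np


def make_cv_model(dt, q=1e-2, r=1e-2):
    """Constant-velocity model. State is [x, y, vx, vy]; measurement is [x, y]."""
    F = np.array([[1.0, 0.0, dt, 0.0],
                  [0.0, 1.0, 0.0, dt],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
    H = np.array([[1.0, 0.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0, 0.0]])  # only the pad position is observed
    Q = q * np.eye(4)  # simplified isotropic process noise (assumption)
    R = r * np.eye(2)  # pad-position measurement noise (assumption)
    return F, H, Q, R


def predict(x, P, F, Q):
    """Propagate the state and its covariance by one time step."""
    return F @ x, F @ P @ F.T + Q


def update(x, P, z, H, R):
    """Fuse a position measurement z into the state estimate."""
    y = z - H @ x                   # innovation
    S = H @ P @ H.T + R             # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)  # Kalman gain
    x = x + K @ y
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P


def run_demo(dt=0.1, visible_steps=50, lost_steps=10):
    F, H, Q, R = make_cv_model(dt)
    x, P = np.zeros(4), np.eye(4)
    # Hypothetical noise-free measurements: the pad moves at 0.5 m/s along x.
    for k in range(visible_steps):
        z = np.array([0.5 * k * dt, 0.0])
        x, P = predict(x, P, F, Q)
        x, P = update(x, P, z, H, R)
    tracked = x.copy()
    # The pad has left the FOV: no updates, only predictions to guide the search.
    for _ in range(lost_steps):
        x, P = predict(x, P, F, Q)
    return tracked, x


if __name__ == "__main__":
    tracked, predicted = run_demo()
    print("estimated velocity:", tracked[2:])
    print("predicted search position:", predicted[:2])
```

A constant-velocity model is the simplest choice that still yields a velocity estimate. A real UAV setup would also need to account for the vehicle's own motion, which the SLAM pose from PTAM could supply.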

This video accompanies the following paper: Weijia Yao, Zhiwen Zeng, Xiangke Wang, Huimin Lu, Zhiqiang Zheng. Distributed Encirclement Control with Arbitrary Spacing for Multiple Anonymous Mobile Robots. Proceedings of the 36th Chinese Control Conference, 2017.

Abstract: Encirclement control enables a multi-robot system to rotate around a target while preserving a circular formation, which is useful in real-world applications such as entrapping a hostile target. In this paper, a distributed control law is proposed that allows any number of anonymous and oblivious robots, starting from random three-dimensional positions, to form a specified circular formation with any desired inter-robot angular distances (i.e., spacing) and to encircle the target. Arbitrary spacing is useful for a system of heterogeneous robots that, for example, have different kinematic capabilities, since the spacing can be designed manually for any specific purpose. The robots are modeled as single integrators, and each robot can only sense the angular positions of its two neighboring robots, so the control law is distributed. Theoretical analysis and simulation results are provided to demonstrate the stability and effectiveness of the proposed control strategy.
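To make the "arbitrary spacing from neighbor angles only" idea concrete, here is a simplified planar, angle-only illustration. It is not the control law from the paper. It assumes that the robots already lie on the circle in counter-clockwise order, that each robot behaves as a single integrator in its angle, and that each robot knows its own desired gaps (the hypothetical `desired` list, which must sum to 2π). Robot i rotates at a common rate `omega` plus the correction `k * (desired[i-1] * ahead - desired[i] * behind)`. This correction is zero exactly when every gap is proportional to its desired value, and because the gaps always sum to 2π, that forces each gap to equal its desired value.

```python
import math

TWO_PI = 2.0 * math.pi


def angular_gaps(theta):
    """Counter-clockwise gap from robot i to robot i+1, wrapped into [0, 2*pi)."""
    n = len(theta)
    return [(theta[(i + 1) % n] - theta[i]) % TWO_PI for i in range(n)]


def step(theta, desired, omega, k, dt):
    """One Euler step of the illustrative (hypothetical) angle-only law.

    Robot i uses only its own angle and the angles of its neighbors i-1 and i+1.
    desired[i] is the target gap from robot i to robot i+1.
    """
    n = len(theta)
    gaps = angular_gaps(theta)
    new_theta = []
    for i in range(n):
        ahead = gaps[i]       # gap to the next robot
        behind = gaps[i - 1]  # gap from the previous robot (index -1 wraps around)
        # Correction vanishes when ahead / desired[i] == behind / desired[i - 1].
        u = omega + k * (desired[i - 1] * ahead - desired[i] * behind)
        new_theta.append((theta[i] + dt * u) % TWO_PI)
    return new_theta


def simulate(theta0, desired, omega=0.5, k=1.0, dt=0.01, steps=2000):
    """Integrate the whole team for steps * dt seconds and return final angles."""
    theta = list(theta0)
    for _ in range(steps):
        theta = step(theta, desired, omega, k, dt)
    return theta


if __name__ == "__main__":
    desired = [math.pi / 2, math.pi / 3, 2 * math.pi / 3, math.pi / 2]
    final = simulate([0.0, 0.4, 2.0, 3.5], desired)
    print("final gaps:", angular_gaps(final))
    print("desired:   ", desired)
```

In this run, the four gaps should settle at the unequal desired values while the formation as a whole keeps rotating at `omega`. The paper's setting is broader: it handles 3D initial positions and oblivious robots, which this sketch does not model.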

This video shows the experimental results of the following paper: Xieyuanli Chen, Huimin Lu, Junhao Xiao, Hui Zhang, Pan Wang. Robust relocalization based on active loop closure for real-time monocular SLAM. Proceedings of the 11th International Conference on Computer Vision Systems (ICVS), 2017.

Abstract. State-of-the-art monocular Simultaneous Localization and Mapping (SLAM) algorithms have achieved remarkable performance. However, tracking failure remains a challenging problem in monocular SLAM, and it seems almost inevitable during long-term SLAM in large-scale environments. In this paper, we propose a relocalization system based on active loop closure, which enables monocular SLAM to detect and recover from tracking failures automatically, even in previously unvisited areas where no keyframe exists. We tested our system in extensive experiments on the widely used KITTI dataset and on our own dataset, which was acquired with a hand-held camera in large-scale outdoor and small-scale indoor real-world environments with deliberately introduced shakes and interruptions. The experimental results show that, compared with other relocalization systems, ours achieved the shortest recovery time (within 5 ms) and the longest successful distance (up to 46 m). Furthermore, our system is more robust than the others, as it can handle different kinds of tracking failures, e.g., those caused by blur, sudden motion, and occlusion. Besides robots and autonomous vehicles, our system can also be employed in other applications, such as mobile phones and drones.

This video shows the experimental results of the following paper: Xieyuanli Chen, Hui Zhang, Huimin Lu, Junhao Xiao, Qihang Qiu and Yi Li. Robust SLAM system based on monocular vision and LiDAR for robotic urban search and rescue. Proceedings of the 15th IEEE International Symposium on Safety, Security, and Rescue Robotics 2017 (SSRR 2017), Shanghai, 2017.

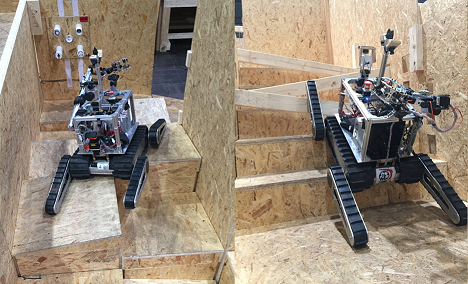

Abstract. In this paper, we propose a monocular SLAM system for robotic urban search and rescue (USAR). With this system, rescue robots can carry out most USAR tasks (e.g., localization, mapping, exploration, and object recognition) using only a single camera. The proposed system can be a promising basis for fully autonomous rescue robots. However, the feature-based map built by monocular SLAM is difficult for the operator to understand and use. We therefore combine the monocular SLAM with a 2D LiDAR SLAM to realize a SLAM system with 2D mapping and 6D localization. This combined system not only recovers the real scale of the environment and makes the map more user-friendly, but also solves the problem that the 2D LiDAR SLAM cannot track the robot pose when the robot climbs stairs and ramps. We tested our system on a real rescue robot in simulated disaster environments. The experimental results show that the proposed system achieves good performance in USAR. The system has also been successfully applied in the RoboCup Rescue Robot League (RRL) competitions, where our rescue robot team entered the top 5 and won Best in Class Small Robot Mobility at RoboCup 2016 RRL in Leipzig, Germany, as well as the championships of the 2016 and 2017 RoboCup China Open RRL.
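Monocular SLAM recovers a trajectory only up to an unknown scale factor, and the abstract states that pairing it with a 2D LiDAR SLAM yields the real scale. As a hedged illustration of one common way to do this (not necessarily the method in the paper), the metric scale can be estimated by least squares over consecutive displacement lengths of time-synchronized poses. These poses should be recorded while the robot drives on flat ground, where the planar LiDAR displacement matches the true motion. The function name `estimate_scale` and the input format (equally long lists of time-aligned positions) are assumptions.

```python
import math


def estimate_scale(mono_pts, lidar_xy):
    """Least-squares scale s minimizing sum (|dl| - s * |dm|)^2.

    mono_pts: time-aligned monocular SLAM positions (arbitrary scale, 2D or 3D).
    lidar_xy: matching 2D LiDAR SLAM positions (metric).
    Comparing displacement *lengths* avoids aligning the two frames first.
    Assumes flat-ground segments only; on stairs or ramps the planar LiDAR
    displacement would underestimate the true motion.
    """
    if len(mono_pts) != len(lidar_xy) or len(mono_pts) < 2:
        raise ValueError("need two equally long trajectories with >= 2 poses")
    num = 0.0
    den = 0.0
    for m0, m1, l0, l1 in zip(mono_pts, mono_pts[1:], lidar_xy, lidar_xy[1:]):
        dm = math.dist(m0, m1)  # monocular step length (unknown scale)
        dl = math.dist(l0, l1)  # LiDAR step length (metric)
        num += dl * dm
        den += dm * dm
    if den == 0.0:
        raise ValueError("monocular trajectory contains no motion")
    return num / den


if __name__ == "__main__":
    # Hypothetical data: LiDAR frame rotated by 90 degrees and 2.5x larger.
    mono = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (3.0, 2.0, 0.0)]
    lidar = [(0.0, 0.0), (0.0, 2.5), (-5.0, 2.5), (-5.0, 7.5)]
    s = estimate_scale(mono, lidar)
    print("estimated scale:", s)
    metric_mono = [tuple(s * c for c in p) for p in mono]
    print("metric monocular trajectory:", metric_mono)
```

Once `s` is known, multiplying the monocular positions (and map points) by `s` puts them in metres. In practice the estimate would be refreshed over a sliding window to follow scale drift.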

Supported by the National University of Defense Technology, our team designed the NuBot rescue robot in its entirety, from the mechanical structure to the electronic architecture and software system. Thanks to its strong mechanical structure, the robot has good mobility and is quite durable, so it does not get trapped even on the highly cluttered and unstructured terrain found in urban search and rescue. The electronic architecture is built to industrial standards so that it can withstand electromagnetic interference and physical impacts during intensive tasks. The software system is developed on the Robot Operating System (ROS). By combining our own programs with several basic open-source ROS packages, we developed a complete software system covering localization, mapping, exploration, object recognition, etc. Our robot system has been successfully applied and tested in the RoboCup Rescue Robot League (RRL) competitions, where our rescue robot team entered the top 5 and won Best in Class Small Robot Mobility at RoboCup 2016 RRL in Leipzig, Germany, and won the 2016 and 2017 RoboCup China Open RRL competitions.

The following pictures show our rescue robot competing in the RoboCup 2016 RRL competition.

1. Real Robot Code

nubot_ws: https://github.com/nubot-nudt/nubot_ws

2. Simulation System Based on ROS and Gazebo

Single robot simulation demo: https://github.com/nubot-nudt/single_nubot_gazebo

Multi-robot simulation: https://github.com/nubot-nudt/gazebo_visual

Simatch for China Robot Competition: https://github.com/nubot-nudt/simatch

Note: The last option integrates all the components needed for a complete simulation, so it is the recommended download for multi-robot coordination research. It comes with English documentation and some comments in Chinese.

3. Coach for Simulation

coach_ws: https://github.com/nubot-nudt/coach4sim

Anyone is welcome to download and use them. :)

1. Team Description Paper

The team description paper can be downloaded here. Its main contribution is a newly designed three-wheeled robot.

2. Five Papers from the Last Five Years

[1] Dai, W., Yu, Q., Xiao, J., & Zheng, Z. (2016). Communication-less Cooperation between Soccer Robots. In 2016 RoboCup Symposium, Leipzig, Germany. [PDF]

[2] Xiong, D., Xiao, J., Lu, H., et al. (2016). The design of an intelligent soccer-playing robot. Industrial Robot: An International Journal, 43(1): 91-102. [PDF]

[3] Yao, W., Dai, W., Xiao, J., Lu, H., & Zheng, Z. (2015). A Simulation System Based on ROS and Gazebo for RoboCup Middle Size League. IEEE Conference on Robotics and Biomimetics, Zhuhai, China. [PDF]

[4] Lu, H., Yu, Q., Xiong, D., Xiao, J., & Zheng, Z. (2015). Object Motion Estimation Based on Hybrid Vision for Soccer Robots in 3D Space. In RoboCup 2014: Robot World Cup XVIII (pp. 454-465). Springer International Publishing. [PDF]

[5] Lu, H., Li, X., Zhang, H., Hu, M., & Zheng, Z. (2013). Robust and Real-time Self-localization Based on Omnidirectional Vision for Soccer Robots. Advanced Robotics, 27(10): 799-811. [PDF]

3. Results and Awards in the Last Three Years

2016

2015

2014

4. Qualification video

The qualification video for RoboCup 2017 Nagoya, Japan should appear below. If it does not, you can find it on our Youku channel (recommended for users in China) or our YouTube channel (recommended for users outside China).

5. Mechanical and Electrical Description and Software Flow Chart

NuBot Team Mechanical and Electrical Description together with a Software Flow Chart can be downloaded here.

6. Contributions to the RoboCup MSL community